First Speaker: VP of Amazon Web Services – Adam Selipsky

Motivation for building AWS – Scaling Amazon.com through the ’90s was really rough. Ten years of growth caused a lot of headaches.

What if you could outsource IT Infrastructure? What would this look like?

Needs:

Storage

Compute abilities

Database

Transactions

Middleware

Core Services:

Reliability

Scalability – Lots of companies have spiky business periods

Performance – CoLo facilities and other silos in the past have shown that developers do not want slowness and won’t accept it

Simplicity – No learning curve or as little as possible

Cost Effective – Prices are public and pay as you go. No hidden fees. Capital expenses cut way down for startups

Initial Suite of services: S3, EC2, SimpleDB, FPS, DevPay, SQS, Mechanical Turk

Cloud computing is a buzzword for letting someone else manage your infrastructure. Time to market improves hugely since you don’t have to buy boxes, CoLo hosting, bandwidth, and more.

Second Speaker: Jinesh Varia, Evangelist of AWS

Promised to show us their roadmap for the next 2 years.

Amazon has 3 business units

Amazon.com, Amazon Services for Sellers, and Amazon Web Services

Spent 2 billion on infrastructure costs already for AWS

Analogy

– Electricity is generated somewhere else; generating your own doesn’t really add any value.

There are a certain number of undifferentiated services: server hosting, bandwidth, hardware, contracts, moving facilities, … The delay from idea to product is huge.

Example of Animoto.com

They own no hardware. None. Serverless startup.

They went from 40 servers to 5000 in 3 days. Facebook app. Signed up 25,000 users every hour.
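A quick back-of-the-envelope check on those Animoto numbers. The talk only gave the endpoints, so this assumes growth compounded evenly over the 3 days, which is almost certainly not how it really happened; `growth_stats` is just a name I made up for the arithmetic.

```python
def growth_stats(start: int, end: int, days: int, signups_per_hour: int):
    """Back-of-the-envelope scale-up numbers; assumes uniform compound growth."""
    growth = end / start                    # total multiplier over the period
    daily_factor = growth ** (1 / days)     # per-day multiplier if growth compounded evenly
    signups_per_second = signups_per_hour / 3600
    return growth, daily_factor, signups_per_second


growth, daily, per_sec = growth_stats(40, 5000, 3, 25_000)
print(growth)             # 125.0
print(round(daily, 2))    # 5.0
print(round(per_sec, 2))  # 6.94
```

So roughly a 5x jump in servers every day, and about 7 new users every second.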

Use Cases

Media Sharing and Distribution

Batch and Parallel Processing

Backup and Archive and Recovery

Search Engines

Social Networking Apps

Financial Applications and Simulations

What do you need?

S3, EC2, SimpleDB, FPS, DevPay, SQS, Mechanical Turk

S3

50,000 Transactions Per Second is what S3 is running right now.

99.9% Uptime
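To put those two S3 numbers in perspective, here is the arithmetic. The talk didn't say what period the 99.9% is measured over, so this assumes a plain 365-day year (and a 30-day month for comparison), and it assumes the 50,000 TPS rate were sustained all day, which it probably isn't.

```python
SECONDS_PER_DAY = 24 * 60 * 60
HOURS_PER_YEAR = 365 * 24


def requests_per_day(tps: int) -> int:
    """Total requests if a transactions-per-second rate held for a whole day."""
    return tps * SECONDS_PER_DAY


def allowed_downtime_hours(uptime_pct: float, period_hours: float = HOURS_PER_YEAR) -> float:
    """Hours of downtime permitted by an uptime percentage over a period."""
    return (1 - uptime_pct / 100) * period_hours


print(requests_per_day(50_000))                               # 4320000000
print(round(allowed_downtime_hours(99.9), 2))                 # 8.76 hours per year
print(round(allowed_downtime_hours(99.9, 30 * 24) * 60, 1))   # 43.2 minutes per month
```

About 4.3 billion requests a day, and 99.9% still leaves room for almost 9 hours of downtime a year.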

EC2

Unlimited Compute power

Scale capacity up or down. Linux and OpenSolaris (ugh, Solaris) are supported.

Elastic Block Store is finally here! Yay!
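"Scale capacity up or down" boils down to deciding how many instances you need right now. Nothing in the talk covered how to decide that, so this is a hypothetical rule of my own: cover the current load given an assumed per-instance capacity, and clamp to a minimum and maximum fleet size. Actually launching or terminating instances is left out entirely.

```python
import math


def desired_instances(load_rps: float, per_instance_rps: float,
                      min_instances: int = 1, max_instances: int = 100) -> int:
    """Hypothetical scaling rule: enough instances for the load, clamped to [min, max]."""
    if per_instance_rps <= 0:
        raise ValueError("per_instance_rps must be positive")
    needed = math.ceil(load_rps / per_instance_rps)   # round up so we never under-provision
    return max(min_instances, min(max_instances, needed))


print(desired_instances(950, 100))                        # 10
print(desired_instances(0, 100))                          # 1 (never scale to zero here)
print(desired_instances(50_000, 100, max_instances=200))  # 200 (capped)
```

The min/max clamp is there so a traffic spike (or a bug in the load metric) can't run up an unbounded bill.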

SimpleDB

Not relational, no SQL, but highly available and highly accessible. Index data…
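To make "not relational, but indexed" concrete: SimpleDB stores items in domains, each item being a bag of attribute/value pairs (string values, possibly several per attribute), and it indexes the attributes for you. The toy below mimics that shape in memory with an inverted index. The `Domain` class and its `put`/`find` methods are made up for illustration and are not SimpleDB's actual API.

```python
from collections import defaultdict


class Domain:
    """Toy in-memory stand-in for a schemaless attribute store (hypothetical API)."""

    def __init__(self) -> None:
        self.items: dict[str, dict[str, set[str]]] = {}
        # Inverted index: (attribute, value) -> names of items holding that pair.
        self.index: defaultdict[tuple[str, str], set[str]] = defaultdict(set)

    def put(self, item_name: str, attributes: dict[str, list[str]]) -> None:
        """Add string values to an item; an attribute may hold several values."""
        item = self.items.setdefault(item_name, {})
        for attr, values in attributes.items():
            for value in values:
                item.setdefault(attr, set()).add(value)
                self.index[(attr, value)].add(item_name)

    def find(self, attr: str, value: str) -> list[str]:
        """Equality lookup answered from the index instead of scanning every item."""
        return sorted(self.index.get((attr, value), set()))


d = Domain()
d.put("video1", {"owner": ["alice"], "tag": ["kids", "park"]})
d.put("video2", {"owner": ["bob"], "tag": ["park"]})
print(d.find("tag", "park"))     # ['video1', 'video2']
print(d.find("owner", "carol"))  # []
```

No schema and no joins: items don't have to share attributes, and you query by attribute values rather than with SQL.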

SQS

Acts as the glue that ties all the services together. A transient buffer? Not sure how I feel about that.
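The "glue" idea is that a queue decouples whoever produces work from whoever processes it, so each side can scale or stall independently while the queue absorbs bursts. SQS does this as a hosted service over HTTP; the sketch below uses Python's in-process `queue.Queue` purely as a local stand-in to show the pattern. It is not the SQS API, and the `.upper()` call is a placeholder for real work.

```python
import queue
import threading


def run_pipeline(jobs: list[str], workers: int = 3) -> list[str]:
    """Producer puts jobs on a buffer; independent worker threads drain it."""
    buffer: queue.Queue = queue.Queue()
    results: list[str] = []
    lock = threading.Lock()

    def worker() -> None:
        while True:
            job = buffer.get()
            if job is None:           # sentinel: no more work for this worker
                return
            processed = job.upper()   # placeholder for real work (e.g. transcoding)
            with lock:
                results.append(processed)

    threads = [threading.Thread(target=worker) for _ in range(workers)]
    for t in threads:
        t.start()
    for job in jobs:                  # producer side: enqueue and move on
        buffer.put(job)
    for _ in threads:                 # one sentinel per worker, queued after all jobs
        buffer.put(None)
    for t in threads:
        t.join()
    return sorted(results)


print(run_pipeline(["a", "b", "c"]))  # ['A', 'B', 'C']
```

Because the queue is FIFO and the sentinels go in after every job, all jobs are handed out before any worker is told to stop.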

DevPay and FPS

Developers get to use Amazon’s billing infrastructure. Sounds lame and sort of pyramid-schemey.

Mechanical Turk

Allows you to get people on demand. Perfect for high-volume micro tasks.

Human Intelligence Tasks (HITs). Outsourcing dummy work, I guess… Not sure.

Sample Architecture

Podango

He wrote a Cloud Architecture PDF

Future Roadmap

Focus on security features and certifications

Continued focus on operational excellence

US and international expansion

Localization of technical resources

Amazon EC2 GA and SLA – Out of beta and SLA delivered << This is really good for us! Now if only Gmail would get out of beta after 5 years!

Windows Server Support

Additional services

Amazon Start-Up Challenge is open. 100K

aws.amazon.com/blog

Jinesh Varia, jvaria@amazon.com

Customer Testimonials

Splunk used AWS to host a development camp and start an instance. Email instructions and SSH keys. Free, Open Source. DevCamp.

Fabulatr at Google Code: it starts up an instance, gets it ready, and sends an email with an SSH key to the user.
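The "email the user their SSH key" step might look roughly like this. It is not Fabulatr's code (I haven't read it). It only builds the message with Python's standard `email.message.EmailMessage` and doesn't send it. The sender address, the default `root` login, the key filename and the `build_welcome_email` helper are all hypothetical.

```python
from email.message import EmailMessage


def build_welcome_email(to_addr: str, host: str, key_pem: bytes,
                        user: str = "root", key_name: str = "devcamp-key.pem") -> EmailMessage:
    """Compose (but do not send) an 'instance ready' email with the key attached."""
    msg = EmailMessage()
    msg["From"] = "devcamp@example.com"   # hypothetical sender address
    msg["To"] = to_addr
    msg["Subject"] = f"Your instance {host} is ready"
    msg.set_content(
        "Your instance is up. Save the attached key, then run:\n\n"
        f"  chmod 600 {key_name}\n"
        f"  ssh -i {key_name} {user}@{host}\n"
    )
    # Attaching bytes requires an explicit MIME type; this makes the message multipart/mixed.
    msg.add_attachment(key_pem, maintype="application", subtype="octet-stream",
                       filename=key_name)
    return msg


msg = build_welcome_email("student@example.com", "ec2-host.example.com", b"-----FAKE KEY-----\n")
print(msg["Subject"])  # Your instance ec2-host.example.com is ready
```

Actually delivering it would be a separate step, e.g. `smtplib.SMTP(...).send_message(msg)` against whatever mail server you use.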

Another Use Case – Sales Engineering – POC, Joint work with Support, A place to play, Splunk Live Demo

Splunk blog: there are some videos on the blog.

Put Splunk in your cloud

Resources

download.splunk.com

blogs.splunk.com/thewilde -> Inside the Cloud Video

code.google.com/p/fabulatr

RightScale: you can’t use Elasticfox from an iPhone, but you can use RightScale.

OtherInbox

Launched on Monday. Helps users manage their inbox: emails from OnStar, receipts from Apple. OtherInbox allows me to give out different addresses.

facebook@james.otherinbox.com

Seems like a cool app.
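Judging only from that one example address, the scheme seems to be `<who-you-gave-it-to>@<you>.otherinbox.com`, which lets incoming mail be sorted by the address it arrived at. This parser is a hypothetical sketch of that inferred scheme. It is not OtherInbox's actual logic, and the function name is my own.

```python
def parse_per_sender_address(address: str, base_domain: str = "otherinbox.com") -> tuple[str, str]:
    """Split 'label@user.base_domain' into (user, label). Hypothetical, inferred scheme."""
    local, _, domain = address.strip().lower().partition("@")
    suffix = "." + base_domain
    if not local or not domain.endswith(suffix):
        raise ValueError(f"not a per-sender address: {address!r}")
    user = domain[: -len(suffix)]
    if not user or "." in user:
        raise ValueError(f"unexpected user part in {address!r}")
    return user, local


print(parse_per_sender_address("facebook@james.otherinbox.com"))  # ('james', 'facebook')
```

The label then acts as a free per-sender folder: anything arriving at facebook@ is from (or was leaked by) whoever you gave that address to.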

Use Google Docs to grab information ad hoc.

They run their SQL databases on EBS in a master/slave relationship. They were formerly on EC2 without EBS; now EBS is awesome.
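A common way an app uses a master/slave pair is to send writes to the master and spread reads across the slaves. The talk didn't say how OtherInbox routes queries, so this is a generic, hypothetical sketch. The class name and hostnames are made up, and classifying statements by their first keyword is deliberately naive.

```python
import itertools


class MasterSlaveRouter:
    """Send writes to the master; spread reads round-robin over the slaves."""

    WRITE_VERBS = {"INSERT", "UPDATE", "DELETE", "REPLACE", "CREATE", "ALTER", "DROP"}

    def __init__(self, master: str, slaves: list[str]) -> None:
        self.master = master
        self._slaves = itertools.cycle(slaves) if slaves else None

    def route(self, sql: str) -> str:
        words = sql.split(None, 1)
        verb = words[0].upper() if words else ""
        # Writes (and everything, if there are no slaves) must go to the master.
        if verb in self.WRITE_VERBS or self._slaves is None:
            return self.master
        return next(self._slaves)


r = MasterSlaveRouter("db-master", ["db-slave-1", "db-slave-2"])
print(r.route("SELECT * FROM messages"))      # db-slave-1
print(r.route("INSERT INTO messages VALUES (1)"))  # db-master
print(r.route("select 1"))                    # db-slave-2
```

One caveat with this split: slaves replicate asynchronously, so a read issued right after a write may not see it yet. Reads that must be fresh are often sent to the master too.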

Built on Ruby on Rails (MVC) and SproutCore (a JavaScript framework)

austincloudcomputing.com

MyBaby Our Baby

Share, organize, and save all of your kids’ videos and pictures

Invite friends and family to your site, they get emails about your kids when you add content

Other people can add photos of your children and pictures from other parents (at the park, babysitter, …)

Uses S3 only

Architecture for LB

Two Front End Load Balancing Proxy Servers that hit the right app servers.

Need to read up on Scalr (Pound). HAProxy was also recommended. He also mentioned that Scalr is cool, but AWS is coming out with a load balancer and tooling for us to use. He said to give it some time, but they would have something for us!

http://aws.typepad.com/aws/2008/04/scalr-.html
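The core job of those front-end proxies is picking an app server for each incoming request. HAProxy and Pound do this (plus health checks, sessions and much more) for real. The toy below only illustrates the basic round-robin-with-health idea. The class and server names are hypothetical, and none of it is HAProxy or Pound code.

```python
class RoundRobinBalancer:
    """Hand out backends in turn, skipping any marked unhealthy."""

    def __init__(self, backends: list[str]) -> None:
        if not backends:
            raise ValueError("need at least one backend")
        self.backends = list(backends)
        self.healthy = set(backends)
        self._next = 0

    def mark_down(self, backend: str) -> None:
        self.healthy.discard(backend)

    def mark_up(self, backend: str) -> None:
        if backend in self.backends:
            self.healthy.add(backend)

    def pick(self) -> str:
        # Try each backend at most once per call, continuing from where we left off.
        for _ in range(len(self.backends)):
            backend = self.backends[self._next]
            self._next = (self._next + 1) % len(self.backends)
            if backend in self.healthy:
                return backend
        raise RuntimeError("no healthy backends")


lb = RoundRobinBalancer(["app1", "app2", "app3"])
print([lb.pick() for _ in range(4)])  # ['app1', 'app2', 'app3', 'app1']
lb.mark_down("app2")
print([lb.pick() for _ in range(3)])  # ['app3', 'app1', 'app3']
```

Running two such proxies side by side, as described above, means losing one front end doesn't take the site down.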

GoDaddy vs AWS. GoDaddy sucks… but under all circumstances, “you need a geek” to get this running.

You need a Linux system administrator under all circumstances, and a lot of people seemed miffed by this. I don’t see what the big deal is. Under the AWS scenario, you don’t need all the infrastructure (hardware) you needed before, and you need a lot fewer people than in the traditional model. You still always need someone who knows how to work the systems, but now you need fewer of them, and you really need people who are Linux admins but also web admins who know traditional web services and applications. There will never be a magic button that just spins up servers ready to go for your unique app. Amazon makes it easier, but you still need a geek… They make the world work…

Amazon has a long track record of success, and OtherInbox places a lot of trust in it.

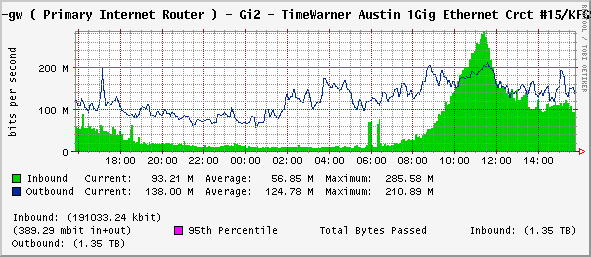

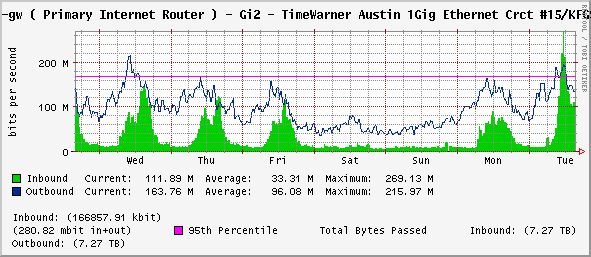

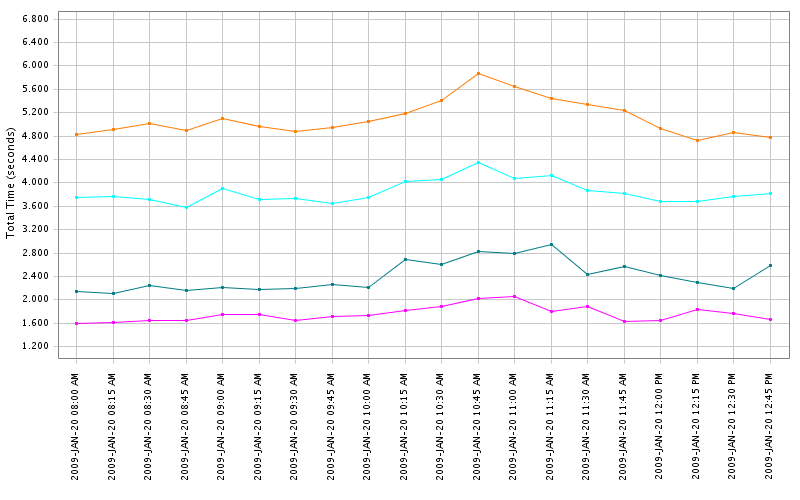

Looking at the traffic specifically, there were two main standouts. We had TCP 1935, which is Flash RTMP, peaking around 85 Mbps, and UDP 8247, which is a special CNN port (they use a plugin called “Octoshape” with their Flash streaming), peaking at 50 Mbps. We have an overall presence of about 2500 people here at our Austin HQ on an average day, but we can’t tell exactly how many were streaming. (Our NetQoS setup shows us there were 13,600 ‘flows,’ but every time a stream stops and starts that creates a new one – and the streams were hiccupping like crazy. We’d have to do a bunch of Excel work to figure out max concurrent, and have better things to do.)
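The "max concurrent" number doesn't actually need Excel. Given a start and end time per flow, a sweep over the sorted start/end events gives the peak overlap directly. Restarted streams show up as a new flow that begins after the old one ended, so they don't inflate the count. This is a sketch that assumes the flow records can be exported as (start, end) pairs; I don't know what NetQoS actually exports, and the sample flows below are made up.

```python
def max_concurrent(flows: list[tuple[float, float]]) -> int:
    """Peak number of overlapping [start, end) intervals, via a sweep over events."""
    events = []
    for start, end in flows:
        if end < start:
            raise ValueError(f"flow ends before it starts: {(start, end)}")
        events.append((start, 1))    # a stream opens
        events.append((end, -1))     # a stream closes
    # Tuples sort by time, then delta, so at equal times closes (-1) come before opens (+1):
    # a stream that stops and restarts at the same instant is not double-counted.
    events.sort()
    current = peak = 0
    for _, delta in events:
        current += delta
        peak = max(peak, current)
    return peak


print(max_concurrent([(0, 10), (5, 15), (12, 20)]))  # 2
print(max_concurrent([(0, 5), (5, 10)]))             # 1 (back-to-back restart)
```

Sorting dominates, so this is O(n log n) and handles 13,600 flows instantly.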
